group-telegram.com/speechtech/1996

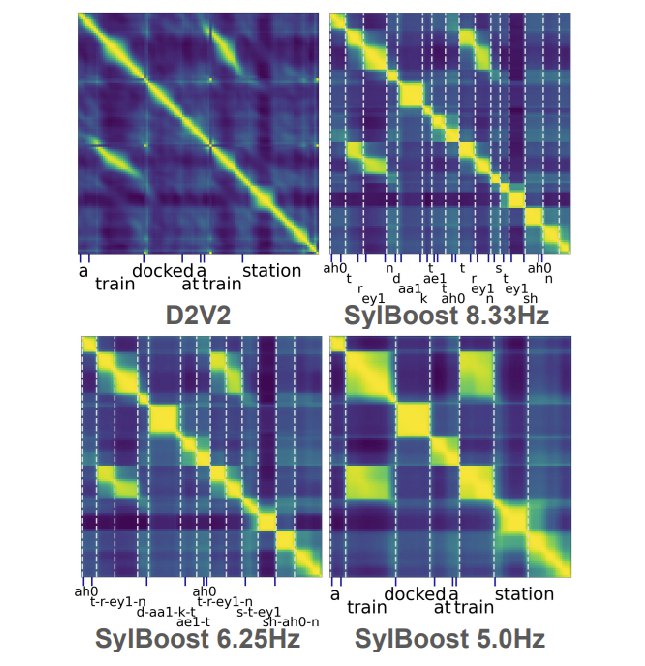

5 Hz tokenization for better speech LM performance

SyllableLM

https://twitter.com/BaadeAlan/status/1844148297562538479

Q: Why can't we get GPT-level understanding from language models on speech?

A: We need better speech tokens!

SyllableLM beats Kyutai Labs' Moshi on semantic understanding after 70 hours of training, using speech tokens at 5 frames/s.
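
To make the 5 frames/s figure concrete, the short sketch below counts how many tokens a clip needs at a given token rate and how many raw waveform samples each token summarizes. It assumes 16 kHz audio (the rate quoted in the abstract) and uses a 50 Hz tokenizer purely as a hypothetical point of comparison; it is not taken from SyllableLM's code.

```python
# Token-count arithmetic for a speech LM tokenizer.
# Assumptions (not from the post): 16 kHz audio, and a hypothetical 50 Hz
# tokenizer used only as a comparison point against 5 Hz.

SAMPLE_RATE_HZ = 16_000


def tokens_for(duration_s: float, token_rate_hz: float) -> int:
    """Number of tokens needed to cover duration_s seconds at token_rate_hz."""
    return round(duration_s * token_rate_hz)


def samples_per_token(token_rate_hz: float, sample_rate_hz: int = SAMPLE_RATE_HZ) -> float:
    """How many raw waveform samples each token summarizes."""
    return sample_rate_hz / token_rate_hz


if __name__ == "__main__":
    clip_s = 10.0
    for rate in (50.0, 5.0):
        print(f"{rate:>4} Hz: {tokens_for(clip_s, rate)} tokens, "
              f"{samples_per_token(rate):.0f} samples/token")
```

At 5 Hz a 10-second clip becomes 50 tokens, ten times shorter than at the hypothetical 50 Hz rate, which is where the sequence-length and compute savings come from.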

https://github.com/AlanBaade/SyllableLM

https://arxiv.org/abs/2410.04029

SyllableLM: Learning Coarse Semantic Units for Speech Language Models

Alan Baade, Puyuan Peng, David Harwath

Language models require tokenized inputs. However, tokenization strategies for continuous data like audio and vision are often based on simple heuristics such as fixed-size convolutions or discrete clustering, which do not necessarily align with the semantic structure of the data. For speech in particular, the high resolution of waveforms (16,000 samples/second or more) presents a significant challenge as speech-based language models have had to use several times more tokens per word than text-based language models. In this work, we introduce a controllable self-supervised technique to merge speech representations into coarser syllable-like units while still preserving semantic information. We do this by 1) extracting noisy boundaries through analyzing correlations in pretrained encoder losses and 2) iteratively improving model representations with a novel distillation technique. Our method produces controllable-rate semantic units at as low as 5Hz and 60bps and achieves SotA in syllabic segmentation and clustering. Using these coarse tokens, we successfully train SyllableLM, a Speech Language Model (SpeechLM) that matches or outperforms current SotA SpeechLMs on a range of spoken language modeling tasks. SyllableLM also achieves significant improvements in efficiency with a 30x reduction in training compute and a 4x wall-clock inference speedup.
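
The abstract does not detail the boundary-extraction or distillation steps, so the sketch below does not reproduce them. It only illustrates a common generic way to turn frame-level features into coarse discrete units once segment boundaries are known: mean-pool the frames in each segment, then map each pooled vector to its nearest cluster centroid. It also checks the bitrate figure under the assumption that bitrate = token rate × log2(codebook size). Under that assumption, 60 bps at 5 Hz means 12 bits per token, i.e. a 4,096-entry codebook. The function names, toy features, and centroids are hypothetical.

```python
import math
from typing import List, Sequence

Vector = List[float]


def pool_segments(frames: Sequence[Vector], boundaries: Sequence[int]) -> List[Vector]:
    """Mean-pool frame features between consecutive boundaries (hypothetical helper).

    boundaries are the frame indices where segments start, e.g. [0, 3, 7] over
    10 frames gives the segments [0:3], [3:7] and [7:10].
    """
    edges = list(boundaries) + [len(frames)]
    pooled = []
    for start, end in zip(edges[:-1], edges[1:]):
        if end <= start:
            raise ValueError("boundaries must be strictly increasing and inside the clip")
        seg = frames[start:end]
        dim = len(seg[0])
        pooled.append([sum(f[d] for f in seg) / len(seg) for d in range(dim)])
    return pooled


def quantize(vectors: Sequence[Vector], centroids: Sequence[Vector]) -> List[int]:
    """Assign each vector the index of its nearest centroid (squared Euclidean distance)."""
    def dist2(a: Vector, b: Vector) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return [min(range(len(centroids)), key=lambda k: dist2(v, centroids[k])) for v in vectors]


def bitrate_bps(token_rate_hz: float, codebook_size: int) -> float:
    """Bitrate assuming each token is coded with log2(codebook_size) bits."""
    return token_rate_hz * math.log2(codebook_size)


if __name__ == "__main__":
    # Toy 1-D "features" for 6 frames, split into two segments at frame 3.
    frames = [[0.0], [0.2], [0.1], [1.0], [0.9], [1.1]]
    units = quantize(pool_segments(frames, [0, 3]), centroids=[[0.0], [1.0]])
    print(units)
    print(bitrate_bps(5.0, 4096))
```

Six frames collapse into two unit IDs here; the real system's boundaries and centroids would come from its learned models rather than from hand-picked values.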

By Speech Technology