Another cool work from OpenAI: Diffusion Models Beat GANs on Image Synthesis.

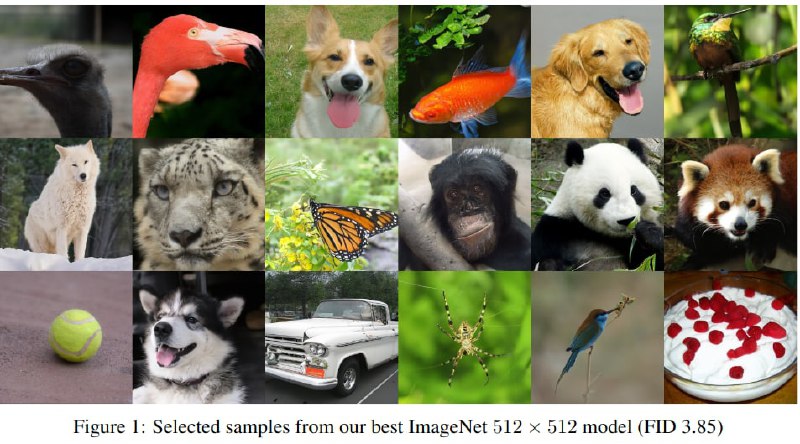

New SOTA for image generation on ImageNet

The paper builds on a relatively new type of generative model, the diffusion probabilistic model. A diffusion model is a parameterized Markov chain trained with variational inference to produce samples that match the data after a finite number of steps. The diffusion (forward) process is a Markov chain that gradually adds noise to the data, in the direction opposite to sampling, until the signal is destroyed. So here we learn the reverse transitions of this chain, which undo the diffusion process. And, of course, every transition is parameterized with a neural network.
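To make the forward (noising) chain concrete, here is a minimal NumPy sketch. It assumes the linear β schedule and the simplified ε-prediction loss from DDPM (Ho et al., 2020), which this line of work builds on. The paper's own schedule, architecture and objective may differ, and `linear_beta_schedule`, `forward_chain`, `q_sample` and `simple_loss` are hypothetical helper names. Each step is q(x_t | x_{t-1}) = N(√(1-β_t)·x_{t-1}, β_t·I). Composing the steps gives the closed form q(x_t | x_0) = N(√ᾱ_t·x_0, (1-ᾱ_t)·I) with ᾱ_t = ∏(1-β_s), so x_t can be sampled directly.

```python
import numpy as np


def linear_beta_schedule(T, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_1..beta_T; a linear schedule as in DDPM (an assumption here)."""
    return np.linspace(beta_start, beta_end, T)


def forward_chain(x0, betas, rng):
    """Run q(x_t | x_{t-1}) step by step: x_t = sqrt(1 - beta_t) * x_{t-1} + sqrt(beta_t) * noise."""
    x = x0
    for beta in betas:
        x = np.sqrt(1.0 - beta) * x + np.sqrt(beta) * rng.standard_normal(x.shape)
    return x


def q_sample(x0, t, alpha_bar, rng):
    """Jump straight to step t via the closed form q(x_t | x_0); t is a 0-based index.

    Returns (x_t, eps) so that eps can serve as the regression target.
    """
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return x_t, eps


def simple_loss(eps_model, x0, alpha_bar, rng):
    """Simplified DDPM objective: pick a random step t and regress the added noise."""
    t = rng.integers(len(alpha_bar))
    x_t, eps = q_sample(x0, t, alpha_bar, rng)
    return np.mean((eps_model(x_t, t) - eps) ** 2)


T = 1000
betas = linear_beta_schedule(T)
alpha_bar = np.cumprod(1.0 - betas)  # alpha_bar_t = prod_{s<=t} (1 - beta_s)
rng = np.random.default_rng(0)

x0 = np.ones((4, 4))                 # stand-in for a tiny "image"
x_T = forward_chain(x0, betas, rng)  # after T steps x_T is close to N(0, I)
print(alpha_bar[-1])                 # tiny: almost none of x0's signal survives
```

The closed form lets training draw a random step t directly instead of simulating the whole chain for every example. The neural network only enters through `eps_model`, which learns to predict the noise and therefore the reverse transitions.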

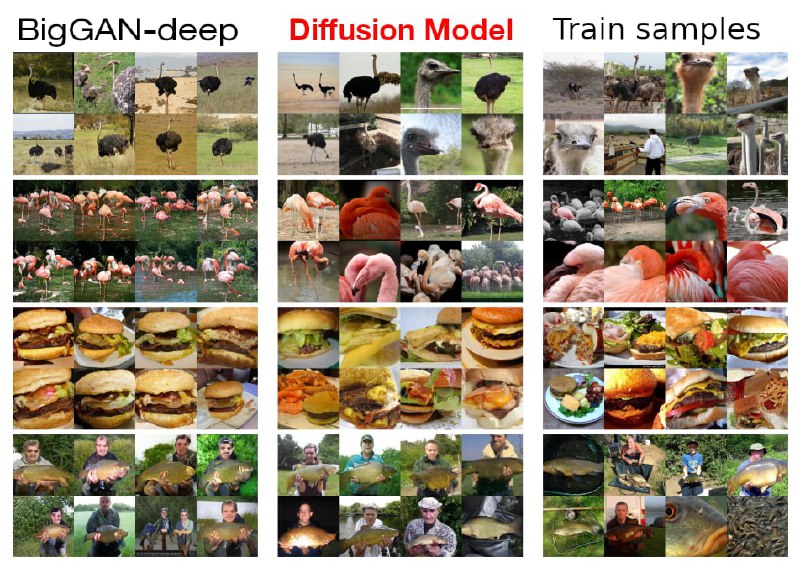

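It produces very high-quality samples, better than those from GANs (the paper reports lower FID than BigGAN-deep on ImageNet). The difference is especially visible in the image of the man holding a fish, which looks much less convincing from BigGAN. The main drawback of diffusion models for now is slow training and inference: generating a single sample requires many sequential passes through the network.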
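The slow inference comes from the sampling procedure. One sample means running the learned reverse chain for all T steps, with one network evaluation per step. Below is a minimal NumPy sketch of DDPM-style ancestral sampling (Ho et al., 2020) that uses the fixed variance choice σ_t² = β_t. `eps_model` is a hypothetical placeholder for the trained noise-prediction network, and the paper's actual sampler differs in details.

```python
import numpy as np


def ddpm_sample(eps_model, shape, betas, rng):
    """Ancestral sampling: start from pure noise x_T and apply T reverse steps.

    Returns the final sample and the number of network calls made.
    """
    alphas = 1.0 - betas
    alpha_bar = np.cumprod(alphas)
    x = rng.standard_normal(shape)  # x_T ~ N(0, I)
    n_calls = 0
    for t in reversed(range(len(betas))):
        eps_hat = eps_model(x, t)  # one forward pass of the network per step
        n_calls += 1
        # Mean of p(x_{t-1} | x_t) under the epsilon parameterization
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bar[t]) * eps_hat) / np.sqrt(alphas[t])
        if t > 0:
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(shape)  # sigma_t^2 = beta_t
        else:
            x = mean  # no noise is added at the final step
    return x, n_calls


T = 1000
betas = np.linspace(1e-4, 0.02, T)
rng = np.random.default_rng(0)


def dummy_eps_model(x, t):
    """Hypothetical placeholder for a trained network; always predicts zero noise."""
    return np.zeros_like(x)


sample, n_calls = ddpm_sample(dummy_eps_model, (4, 4), betas, rng)
print(n_calls)  # 1000: one network evaluation per diffusion step
```

Each step depends on the output of the previous one, so the T network calls cannot be run in parallel across steps. A GAN, by contrast, needs a single forward pass of its generator per sample.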

📝 Paper

⚙️ Code

group-telegram.com/gradientdude/294

BY Gradient Dude
